FLEX: Full-Body Grasping Without Full-Body Grasps

Columbia University

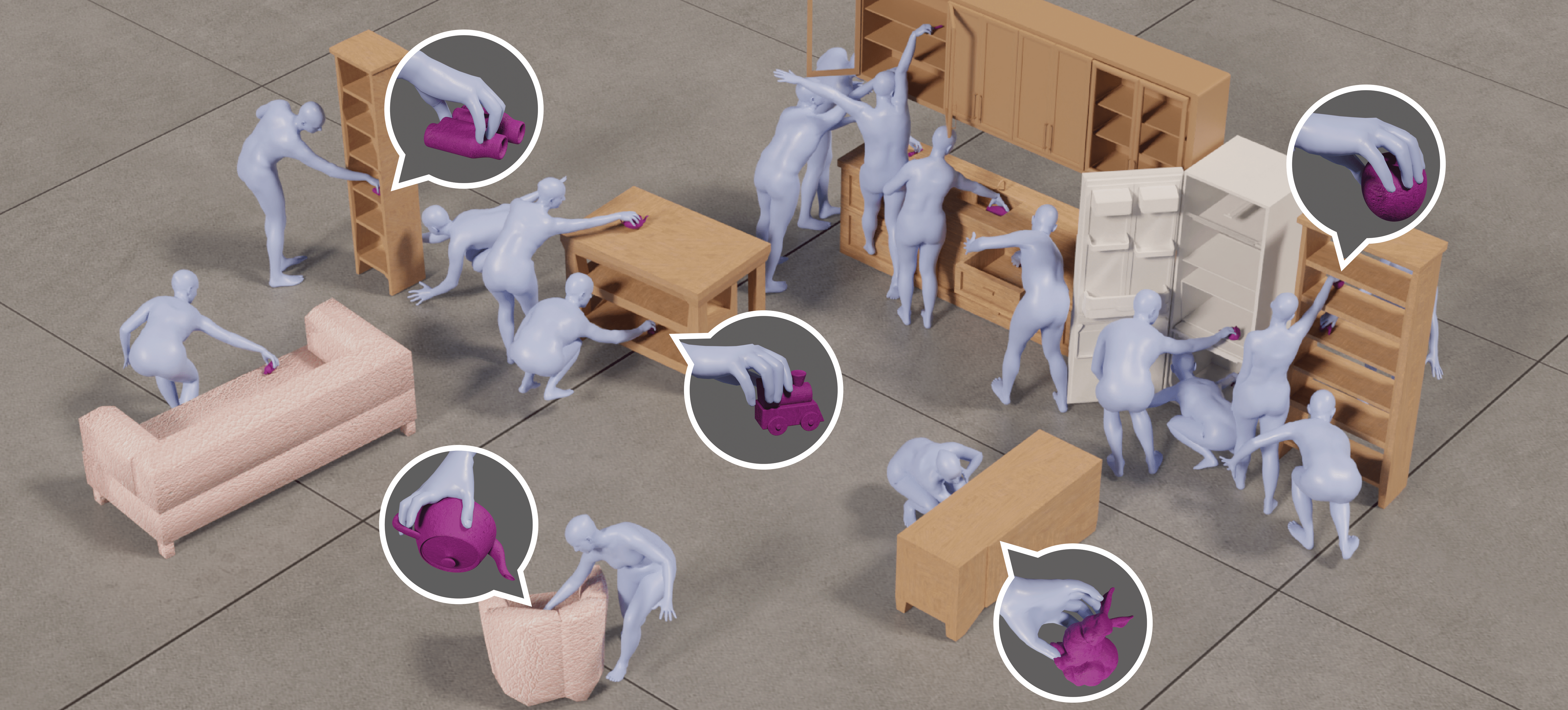

We address the task of generating diverse, realistic virtual humans — hands and full body — grasping everyday objects in everyday scenes without requiring any 3D full-body grasping data. Our key insight is to leverage the existence of both full-body pose and hand grasping priors, composing them using 3D geometrical and anatomical constraints to obtain full-body grasps. Click here to see a fun fly-through of the above scene.

Visualization of the optimization process

At first, the grasp (shown in yellow) penetrates through the cupboard. As the optimization progresses, the grasp evolves so as to not only avoid penetration with the cupboard, but also to allow the human (shown in blue) to best match it, while satisfying other constraints.

Qualitative Results

Click here to see interactive results and comparisons to baselines.Paper

Abstract

Synthesizing 3D human avatars interacting realistically with a scene is an important problem with applications in AR/VR, video games and robotics. Towards this goal, we address the task of generating a virtual human — hands and full body — grasping everyday objects. Existing methods approach this problem by collecting a 3D dataset of humans interacting with objects and training on this data. However, 1) these methods do not generalize to different object positions and orientations, or to the presence of furniture in the scene, and 2) the diversity of their generated full-body poses is very limited. In this work, we address all the above challenges to generate realistic, diverse full-body grasps in everyday scenes without requiring any 3D full-body grasping data. Our key insight is to leverage the existence of both full-body pose and hand grasping priors, composing them using 3D geometrical constraints to obtain full-body grasps. We empirically validate that these constraints can generate a variety of feasible human grasps that are superior to baselines both quantitatively and qualitatively.BibTeX Citation

@inproceedings{tendulkar2022flex,

title={FLEX: Full-Body Grasping Without Full-Body Grasps},

author={Tendulkar, Purva and Sur\'is, D\'idac and Vondrick, Carl},

booktitle = {Conference on Computer Vision and Pattern Recognition ({CVPR})},

year={2023}

}

Method

Method. Given a pre-trained right hand grasping model `G` that can predict global MANO parameters `{theta_h, t_h, R_h}` for a given object, as well as a pre-trained pose prior `P` that can generate feasible full-body poses `theta_b`, our approach called FLEX (Full-body Latent Exploration) generates a 3D human grasping the desired object. To do so, FLEX searches in the latent spaces of `G` and `P` to find the latent variables w and v, respectively, as well as over the space of approaching angles `alpha`, SMPL-X translations `t_b` and global orientations `R_b`, which are represented in 'yaw-pitch-roll' format. This search, or latent-space exploration, is done via model inversion, by backpropagating the gradient of a loss at the output of our model, and finding the inputs that minimize it. This procedure is done iteratively, until the loss (comprising of hand matching, obstacle and gaze losses) is minimized. Additionally, our data-driven pose-ground prior ensures that the pose is reasonable with respect to the ground. During the search, we keep the weights of G and P frozen. Therefore, we do not perform any training; the procedure takes place at inference time. In the figure, the parameters we optimize are shown in yellow, the differentiable (frozen) layers are shown in pink, and the activations are shown in blue.

Dataset

We contribute the ReplicaGrasp dataset which is created by spawning objects from GRAB into the ReplicaCAD scenes, simulated in random positions and orientations using the Habitat simulator. We capture 4,800 instances, with 50 different objects spawned in one of 48 receptacles in both, upright and randomly fallen orientations.

Video Presentation

Acknowledgements

We thank Harsh Agrawal for his helpful feedback, and Alexander Clegg for his help with the Habitat simulator. This research is based on work partially supported by NSF NRI Award #2132519, and the DARPA MCS program under Federal Agreement No. N660011924032. Dídac Surís is supported by the Microsoft PhD fellowship. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors. The webpage template was inspired by this project page.